These are codes I wrote that can be freely used for noncommercial purposes. Please comply with the copyright notice and acknowledge any use.

GNP: Graph Neural Preconditioner

Download the PyTorch codes in GitHub

This is a PyTorch implementation of GNP. It is a graph neural network that can serve as a preconditioner for solving linear systems. For more information, see the paper Graph Neural Preconditioners for Iterative Solutions of Sparse Linear Systems.
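To illustrate the role a preconditioner plays in an iterative solver, here is a minimal sketch of a preconditioned Richardson iteration, $x \leftarrow x + M(b - Ax)$. It is not the GNP code or its API: the function names are hypothetical, the iteration is a deliberately simple stand-in for a Krylov method, and a Jacobi (diagonal) preconditioner takes the place where a learned operator approximating $A^{-1}$ would presumably be plugged in.

```python
def matvec(A, v):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def preconditioned_richardson(A, b, apply_preconditioner, iters=100):
    """Iterate x <- x + M(b - A x), where M is given as a callable.

    apply_preconditioner is a hypothetical hook: any function mapping a
    residual vector to an approximate solution of A z = r.
    """
    x = [0.0] * len(b)
    for _ in range(iters):
        residual = [bi - yi for bi, yi in zip(b, matvec(A, x))]
        correction = apply_preconditioner(residual)
        x = [xi + zi for xi, zi in zip(x, correction)]
    return x

# Small diagonally dominant test system (illustrative data only).
A = [[4.0, 1.0, 0.0],
     [1.0, 4.0, 1.0],
     [0.0, 1.0, 4.0]]
b = [1.0, 2.0, 3.0]

def jacobi(residual):
    """Stand-in preconditioner: divide by the diagonal of A."""
    return [ri / A[i][i] for i, ri in enumerate(residual)]

x = preconditioned_richardson(A, b, jacobi)
```

The solver only ever calls the preconditioner as a black box on a residual vector, which is why a neural network can take the place of a classical preconditioner in principle.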

APAM: Asynchronous Parallel Adaptive stochastic gradient Method

Download the C++-MPI codes in GitHub

This is a C++-MPI implementation of the parallel optimizer APAM. It is used for training neural networks and, in theory, enjoys linear speedup with respect to the number of workers. For more information, see the paper Parallel and Distributed Asynchronous Adaptive Stochastic Gradient Methods.

FastGCN: Method and Theory

Download the Matlab codes in GitHub

[Update: Several years after the publication of FastGCN, Gabe Mancino-Ball (a student I advised) implemented a modern version of it in PyTorch, with a discussion of several algorithmic choices of the method and a demonstration of numerical results. You may download the PyTorch codes from this GitHub.]

This suite of codes includes a Matlab implementation of FastGCN, as well as companion codes for the optimization theory paper that explains stochastic gradient descent with biased but consistent gradient estimators. The Matlab code for FastGCN is observed to be substantially faster than other implementations in TensorFlow or PyTorch. For more information, see the papers FastGCN: Fast Learning with Graph Convolutional Networks via Importance Sampling and Stochastic Gradient Descent with Biased but Consistent Gradient Estimators.

RLCM: Recursively Low-Rank Compressed Matrices

Download the C++ codes in GitHub

This library offers the low-level linear algebra routines for recursively low-rank compressed matrices, as well as the high-level application routines for Gaussian processes and kernel methods. For more information, see the papers Linear-Cost Covariance Functions for Gaussian Random Fields and Hierarchically Compositional Kernels for Scalable Nonparametric Learning.

TreeCodeMatern

Download the C++ codes in the ScalaGAUSS website

This is an MPI program for computing the matrix-vector product with the Matern kernel. It is based on a tree code algorithm proposed in the SISC paper A Fast Summation Tree Code for Matern Kernel. The implementation is documented in A Parallel Tree Code for Computing Matrix-Vector Products with the Matern Kernel.

ScalaGAUSS

Download the Matlab and C++ codes in the ScalaGAUSS website

ScalaGAUSS is a set of scalable tools for analyzing large-scale spatiotemporal data modeled as a Gaussian process/random field. The project addresses the computational challenges of statistical modeling and analysis of data at a very large scale. A number of publications arose from this project. For more information, please see the project website.

Block Preconditioned Conjugate Gradient

Download the Matlab codes

(version: 2011.04.08)

This program is good for prototyping and proofs of concept.

Computing $f(A)b$ via Least Squares Polynomial Approximations

Download the Matlab codes

(version: 2010.11.10)

This is a Matlab program for computing a function of a large sparse matrix times a vector. The underlying technique is a least squares polynomial approximation to the function, and the method can be applied to a general function. For more information, see the SISC paper Computing $f(A)b$ via Least Squares Polynomial Approximations.
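The general idea of approximating $f(A)b$ by $p(A)b$ for a polynomial $p$ can be sketched as follows. This is not the Matlab program or the construction used in the paper: it assumes $A$ is symmetric with spectrum inside a known interval `[lo, hi]`, and it swaps in a discrete least squares fit in the Chebyshev basis at Chebyshev nodes, which may differ from the paper's approach. All function names are hypothetical.

```python
import math

def cheb_ls_coeffs(f, lo, hi, degree, num_nodes=None):
    """Discrete least-squares Chebyshev coefficients of f on [lo, hi].

    Uses the discrete orthogonality of Chebyshev polynomials at the
    Chebyshev nodes, so no linear system needs to be solved.
    """
    n = num_nodes or 2 * (degree + 1)
    thetas = [math.pi * (j + 0.5) / n for j in range(n)]
    # Function values at Chebyshev nodes mapped from [-1, 1] to [lo, hi].
    fvals = [f(0.5 * (hi - lo) * math.cos(t) + 0.5 * (hi + lo)) for t in thetas]
    coeffs = []
    for k in range(degree + 1):
        s = sum(fv * math.cos(k * t) for fv, t in zip(fvals, thetas))
        coeffs.append((1.0 if k == 0 else 2.0) * s / n)
    return coeffs

def matvec(A, v):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def poly_matfun_times_vector(A, b, coeffs, lo, hi):
    """Evaluate sum_k coeffs[k] * T_k(B) b, with B = A shifted to [-1, 1].

    Only matrix-vector products with A are needed; f(A) is never formed.
    """
    def shifted(v):
        Av = matvec(A, v)
        return [(2.0 * av - (hi + lo) * vi) / (hi - lo) for av, vi in zip(Av, v)]

    t_prev = list(b)                      # T_0(B) b
    result = [coeffs[0] * x for x in t_prev]
    if len(coeffs) == 1:
        return result
    t_curr = shifted(b)                   # T_1(B) b
    result = [r + coeffs[1] * x for r, x in zip(result, t_curr)]
    for c in coeffs[2:]:
        # Three-term recurrence: T_{k+1}(B) b = 2 B T_k(B) b - T_{k-1}(B) b
        t_next = [2.0 * y - z for y, z in zip(shifted(t_curr), t_prev)]
        result = [r + c * x for r, x in zip(result, t_next)]
        t_prev, t_curr = t_curr, t_next
    return result

# Example: approximate exp(A) b for a 2x2 symmetric matrix with eigenvalues 1 and 3.
A = [[2.0, 1.0], [1.0, 2.0]]
b = [1.0, 0.0]
coeffs = cheb_ls_coeffs(math.exp, 0.0, 4.0, degree=20)
y = poly_matfun_times_vector(A, b, coeffs, 0.0, 4.0)
```

The cost is one matrix-vector product per polynomial degree, which is what makes this family of methods attractive for large sparse matrices.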

Fast Approximate kNN Graph Construction for High Dimensional Data

Download the C++ codes

(version: 2009.10.13)

This is a C++ program that efficiently computes an approximate kNN graph for high dimensional data via divide and conquer. For more information, see the JMLR paper Fast Approximate $k$NN Graph Construction for High Dimensional Data via Recursive Lanczos Bisection.
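The divide-and-conquer idea can be sketched in a few lines. This is a simplified illustration, not the C++ program: it assumes a split along the coordinate with the largest spread in place of the recursive Lanczos bisection named in the paper title, and the function names, `leaf_size`, and `overlap` parameters are all hypothetical. Overlapping halves are solved recursively, small subsets are solved by brute force, and the neighbor lists of points in the overlap are merged.

```python
import math

def brute_force_knn(points, ids, k):
    """Exact kNN among the points indexed by ids: {id: [(dist, neighbor), ...]}."""
    result = {}
    for i in ids:
        cands = sorted((math.dist(points[i], points[j]), j) for j in ids if j != i)
        result[i] = cands[:k]
    return result

def approx_knn(points, ids, k, leaf_size=32, overlap=0.2):
    """Approximate kNN graph by recursive bisection with overlapping halves."""
    if len(ids) <= leaf_size:
        return brute_force_knn(points, ids, k)
    dim = len(points[ids[0]])
    # Assumed splitting rule: the coordinate with the largest spread.
    axis = max(range(dim), key=lambda d: max(points[i][d] for i in ids)
                                         - min(points[i][d] for i in ids))
    order = sorted(ids, key=lambda i: points[i][axis])
    half = len(order) // 2
    pad = int(overlap * half)             # points shared by both halves
    merged = approx_knn(points, order[:half + pad], k, leaf_size, overlap)
    right = approx_knn(points, order[half - pad:], k, leaf_size, overlap)
    for i, cands in right.items():
        # Merge candidate lists, dropping duplicate neighbors, and keep the k closest.
        combined = {j: d for d, j in merged.get(i, []) + cands}
        merged[i] = sorted((d, j) for j, d in combined.items())[:k]
    return merged

# Deterministic illustrative data: 200 points in 3 dimensions.
points = [(math.sin(1.3 * i), math.cos(0.7 * i), math.sin(2.1 * i)) for i in range(200)]
graph = approx_knn(points, list(range(len(points))), k=5)
```

Each brute-force call touches only a small subset, so the total work stays well below the quadratic cost of comparing all pairs. The price is that a true neighbor may be missed when it falls on the other side of a split outside the overlap.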

Low Rank Orthogonal Approximation of Tensors

Download the Matlab codes

(version: 2009.01.20)

This toolkit provides Matlab functions to compute the low rank orthogonal approximation of a given tensor. For more information, see the SIMAX paper On the Tensor SVD and the Optimal Low Rank Orthogonal Approximation of Tensors.

Sparse Matrix SVD

Download the Matlab codes

(version: 2008.12.10)

This toolkit provides a Matlab function with functionality similar to the standard svds() function, but it consumes much less memory and runs significantly faster than svds() for sparse matrices and large dense matrices. The computation of the SVD is based on the Lanczos algorithm. The toolkit also provides a similar function for low-rank-modified matrices. The provided functions are not meant to replace the standard svds() function for two reasons: (1) they can compute only the largest/smallest singular values/vectors, not others (although modifications to meet such requirements are easy); (2) they have almost no error checks, so one has to use them with caution.

Known issues: The codes were originally developed for large-scale data analysis tasks (such as dimensionality reduction), where the largest singular components are of interest. The underlying algorithm is known to perform poorly when computing the smallest components.

Convex Quadratic Program with Box Constraints

Download the Matlab codes

(version: 2011.07.08)

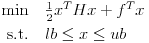

This is a handy Matlab function that solves the quadratic program with box constraints

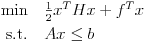

where H is positive definite. The function implements the two-metric projection method, specifically tailored to a positive definite Hessian. This function outperforms the general-purpose Matlab function quadprog(). By duality, it *may* also solve convex quadratic programs with linear constraints.

This code was the prototype implementation in the paper Y. Sheng, T. Yapo, and B. Cutler. Global Illumination Compensation for Spatially Augmented Reality. Eurographics 2010.
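For readers who want a feel for the problem class, here is a minimal sketch of a box-constrained quadratic program solver. It is not the Matlab function: it uses plain projected gradient descent, a simpler relative of the two-metric projection method mentioned above. Since the formula on this page is an image, the notation is an assumption: minimize $\frac{1}{2}x^T H x + f^T x$ subject to $l \le x \le u$. All names are hypothetical.

```python
def matvec(H, x):
    """Dense matrix-vector product for a list-of-rows matrix."""
    return [sum(h * xi for h, xi in zip(row, x)) for row in H]

def clip(x, lower, upper):
    """Project x onto the box lower <= x <= upper, componentwise."""
    return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]

def box_qp_projected_gradient(H, f, lower, upper, iters=500):
    """Projected gradient for min 0.5 x'Hx + f'x subject to lower <= x <= upper.

    Assumes H is symmetric positive definite. The step is 1/L, where L is the
    maximum absolute row sum of H, an upper bound on its largest eigenvalue.
    """
    step = 1.0 / max(sum(abs(h) for h in row) for row in H)
    x = clip([0.0] * len(f), lower, upper)
    for _ in range(iters):
        grad = [hx + fi for hx, fi in zip(matvec(H, x), f)]
        x = clip([xi - step * gi for xi, gi in zip(x, grad)], lower, upper)
    return x

# Illustrative problem: the unconstrained minimizer (-1, 5) lies outside the box.
H = [[2.0, 1.0], [1.0, 2.0]]
f = [-3.0, -9.0]
x = box_qp_projected_gradient(H, f, lower=[0.0, 0.0], upper=[2.0, 2.0])
```

In this example the second variable ends up at its upper bound and the first settles at the minimizer of the remaining one-dimensional problem. Projected gradient uses only first-order information, which is why it can be slow when H is poorly conditioned.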